Editor’s Note: Our friend Russ White, a principal engineer at Ericsson, recently sent along this article on data storage, and we knew immediately that we wanted to share it with you. Enjoy.

There’s been a lot of talk around the shifts coming in storage, but for the network engineer it’s hard to understand and piece together the context. What really is going on in the storage world? Is there a model we can wrap around these changes that will help us understand them better? While I’m not a storage expert, and I don’t even play one on a podcast or blog, I have found some parallels with the past that might help engineers understand the probable future.

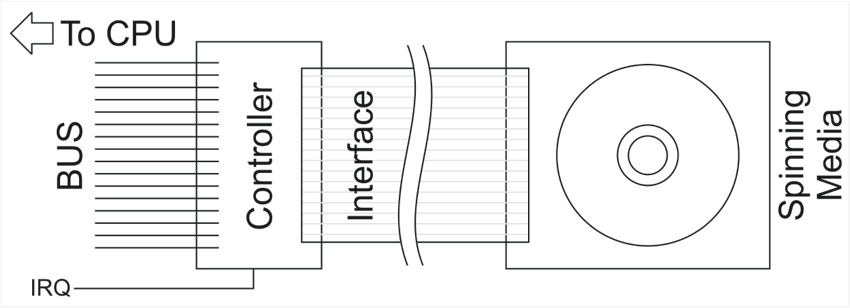

Back in the “early days” (that’s anything more than five years ago for most engineers), there were three storage access standards: SCSI, MFM, and RLL. The typical storage system, in the typical desktop computer, looked something like the figure above.

In this sort of storage system, the controller translated commands from the operating system to store and retrieve information from the disk drive into one of three different signals and put them onto the interface. The interface, actually a ribbon bus in most cases, carried these signals to the physical hard drive. The electronics on the hard drive did little other than position the heads and either read or write the data, as instructed by the controller. Where was data written? In what format was it written? That was all determined by the operating system.

We had huge wars over whether MFM- or RLL-encoded hard drives could store more data, were more secure, more reliable, more efficient, and all the rest. SCSI, in the desktop computer world, was a bit of an “also ran.” You could buy one drive and format it in different ways, depending on which controller you connected it to. The critical point to remember is that while MFM and RLL were data storage formats, SCSI was a signaling protocol, a little mini network that marshalled information onto and off the spinning media. This will become an important distinction in just a moment.

Over time, the controller became integrated with the spinning media of the hard drive, resulting in the Integrated Drive Electronics (IDE) model. In this model, the controller was effectively moved onto the physical hard drive, where it acted as an interpreter between the system bus and the drive itself. In other words, SCSI won, only we didn’t call it SCSI, and we didn’t use the SCSI signaling scheme. The controller became, in effect, a network node that marshalled and unmarshalled information, and the operating system began to see the storage as a black box, rather than as a set of electronics and encoding schemes.

Now we’re at the point where all we have is a wire (eSATA), and the long-term storage has become even more of a black box to the operating system. This new view of storage has enabled a lot of advances in the storage realm, from SSDs connected through eSATA to the newer formats, where the SSD is, in effect, connected directly to the system bus through a really thin interface.

So what does all of this have to do with network storage, and the road ahead? I suspect we’re on the verge of an IDE/eSATA moment in network storage. If you think through what the big storage vendors do, there are a lot of parallels between the old MFM, RLL, and SCSI controllers and today’s drive-to-network interface. There’s a spinning drive, then some magic hardware that connects it to the network, and a set of specialized protocols that carry the contents of the storage device over the wire to the user device (generally speaking, a virtual machine). The VM must have some software on board to connect to the storage device. While this software may be written to a standard, the storage device isn’t really a “black box.” Rather, it’s a piece of hardware sitting out there with a lot of exposed characteristics that need to be dealt with.

Taking the IDE example, I suspect the specialized interface is probably going to move into the drive, making the device a black box once again. Much like the transition to IDE, and then to SATA, then to eSATA, this will enable a lot of innovation in the storage arena on the hardware side of things. In a sense, everything will become network attached storage, because the network interface will be pulled closer and closer to the storage device until it’s flat out integrated with the storage device, leaving a fairly standard “black box” interface for users to touch.
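To make the “black box” idea a little more concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the `BlackBoxStore` interface, the `put`/`get` method names, and the two pretend devices are my own illustration, not any real drive’s API. The point is only that the caller touches one small interface while the internals are free to differ.

```python
from typing import Protocol


class BlackBoxStore(Protocol):
    """Hypothetical 'thin interface': all a user of the storage ever sees."""

    def put(self, key: str, value: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class SpinningDisk:
    """Pretend device that keeps data in a simple key-to-bytes mapping."""

    def __init__(self) -> None:
        self._sectors: dict[str, bytes] = {}

    def put(self, key: str, value: bytes) -> None:
        self._sectors[key] = value

    def get(self, key: str) -> bytes:
        return self._sectors[key]


class FlashDevice:
    """Pretend device with a different internal layout: an append-only log."""

    def __init__(self) -> None:
        self._log: list[bytes] = []
        self._offsets: dict[str, int] = {}

    def put(self, key: str, value: bytes) -> None:
        # Record where the newest copy of this key lives in the log.
        self._offsets[key] = len(self._log)
        self._log.append(value)

    def get(self, key: str) -> bytes:
        return self._log[self._offsets[key]]


def save_and_load(store: BlackBoxStore, key: str, value: bytes) -> bytes:
    """The caller's code is identical no matter what sits behind the interface."""
    store.put(key, value)
    return store.get(key)
```

Calling `save_and_load(SpinningDisk(), "k", b"v")` and `save_and_load(FlashDevice(), "k", b"v")` both hand back the same bytes, which is the freedom the hardware side gains once the interface is the only thing exposed.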

There are some things, of course, in the networking world that might prevent full integration. For instance, data deduplication is a real problem when the storage device is more of a black box. On the other hand, there’s no real reason data deduplication couldn’t become a virtual service sitting “anywhere in the network,” fronting a lot of different devices (not just storage devices). This is pretty much the same job a web cache does today, only in the other direction. It seems all too possible to turn the direction around in a virtual service environment.
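As a rough sketch of what deduplication as a separate service could look like, here is a small Python example. It assumes fixed-size chunks identified by their SHA-256 digest, and it treats the backend as nothing more than a key-to-bytes mapping. The `DedupService` name, its methods, and the 4096-byte chunk size are my own assumptions for illustration, not any vendor’s design.

```python
import hashlib


class DedupService:
    """Hypothetical dedup layer fronting any backend that maps keys to bytes."""

    def __init__(self, backend, chunk_size=4096):
        self.backend = backend        # the black box: any dict-like store
        self.chunk_size = chunk_size  # assumed fixed chunk size
        self.index = {}               # logical name -> ordered chunk digests

    def write(self, name, data):
        digests = []
        for start in range(0, len(data), self.chunk_size):
            chunk = data[start:start + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            # Only chunks the backend has never seen are actually stored.
            if digest not in self.backend:
                self.backend[digest] = chunk
            digests.append(digest)
        self.index[name] = digests

    def read(self, name):
        # Reassemble the object from its chunks, in order.
        return b"".join(self.backend[d] for d in self.index[name])


backend = {}
service = DedupService(backend)
service.write("vm1.img", b"x" * 8192)
service.write("vm2.img", b"x" * 8192)
print(len(backend))  # 1: all four chunks are identical, so one copy is stored
```

Because the service only needs the backend to store and return bytes by key, it does not care what kind of device sits behind it, which is what would let it live anywhere in the network.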

One example of a move in this direction is the Seagate Kinetic line of devices. White box storage is one name for it, but the interfaces must move out of the specialized controller and into the drive (or a virtualized service) for this sort of move to take place.

Russ White is a 20-year veteran of the networking industry who has co-authored 10 books, is deeply involved in Internet standards and software innovation, and blogs at http://www.ntwrk.guru. He holds CCIE #2635, CCDE 2007:001, the CCAr, an MS/IT, and an MACM. Russ is currently a Principal Engineer at Ericsson.