I’m shocked. There is a reasonably good topic I failed to blog about!

It’s not like I didn’t write about it. I’ve expounded on this topic in several design writeups for customers. But I never adapted the material into a blog post.

The title gives away the topic.

So: this blog takes a look at the (Cisco-centric) choices you have when revamping your data center: Cisco ACI or not, and VMware ESX or NSX. And how you use them. Similar logic may well apply to other vendors with data center fabric automation tools.

If you’ve already passed that decision point, this blog might provide additional perspective or even motivate you to change how you do things. Admittedly, deployed data center fabrics have a lot of “inertia.”

Why Use ACI?

ACI provides powerful automation that can now be extended to include the cloud. It can also provide security enforcement.

Consideration: ACI has its own nomenclature, with nuances that differ from the data center switch CLI. “Bridge domain” is the leading example. Thus, learning ACI’s GUI comes with some “mapping” of concepts to learn. This is also true when using ACI to configure networking in the cloud. What does it end up doing “under the hood”? What’s the best way to see what has been built?

Alternatives: DCNM (since renamed Cisco Nexus Dashboard Fabric Controller, or NDFC), or CLI / roll-your-own automation of Nexus switches. For the cloud, you might stick with native tools to reduce the amount of ACI-to-native feature “translation” and the potential for problems.

To me, there are two ways to use ACI and the fabric it builds. One is as a fabric automation tool supporting switches that physical hosts attach to. The other way is to use ACI as a whole-data center controller, with entities like physical or virtual firewalls tightly coupled to the fabric, as opposed to being connected “at arm’s length” via an L2 or L3 “OUT” external connection and separate switch(es).

With the tightly coupled approach, you have the opportunity to use service insertion (in effect, policy-based routing) to force selected traffic through firewalls or other attached devices. That may make it simpler to automate inserting firewall enforcement, rather than ACI policy enforcement, where deemed necessary. In some use cases, it could also mean less traffic going through a firewall, for cost savings. But it also means there is more specialized security forwarding logic in ACI, along with greater dependency on ACI.
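
To make the service insertion idea concrete, here is a toy Python model, loosely patterned on ACI contracts between EPGs. The EPG names, the contract table, and the `next_hop` and `firewall_share` helpers are all hypothetical; this is not ACI’s API or its actual forwarding logic, just a sketch of how redirecting only selected EPG pairs keeps the remaining traffic off the firewall.

```python
from dataclasses import dataclass

# Hypothetical contract table: (source EPG, destination EPG) -> action.
# "redirect" stands in for policy-based redirect through a firewall,
# "permit" for enforcement by the fabric alone; missing pairs are denied.
CONTRACTS = {
    ("web", "app"): "permit",
    ("app", "db"): "redirect",
    ("users", "web"): "redirect",
}


@dataclass(frozen=True)
class Flow:
    src_epg: str
    dst_epg: str


def next_hop(flow: Flow, firewall: str = "fw-1") -> str:
    """Return where this toy fabric sends a flow: direct, firewall, or drop."""
    action = CONTRACTS.get((flow.src_epg, flow.dst_epg), "deny")
    if action == "redirect":
        return firewall  # detour through the inserted firewall
    if action == "permit":
        return "direct"  # forwarded by the fabric, no firewall hop
    return "drop"  # no contract: default deny


def firewall_share(flows: list[Flow]) -> float:
    """Fraction of the permitted flows that traverse the firewall."""
    hops = [next_hop(f) for f in flows]
    allowed = [h for h in hops if h != "drop"]
    if not allowed:
        return 0.0
    return sum(h != "direct" for h in allowed) / len(allowed)


if __name__ == "__main__":
    flows = [Flow("web", "app"), Flow("app", "db"),
             Flow("users", "web"), Flow("web", "db")]
    for f in flows:
        print(f.src_epg, "->", f.dst_epg, ":", next_hop(f))
    print("share through firewall:", round(firewall_share(flows), 2))
```

Here only two of the three permitted flows detour through the firewall; the web-to-app flow stays on the fabric. That is the “less traffic through the firewall” argument, and also the extra forwarding logic that now lives in ACI.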

I personally favor the “arm’s length” approach, where the ACI fabric connects to separate L3 switches that act as data center routers, and firewalls and similar devices attach to those switches rather than to the fabric. I consider this more modular. It separates the routing and firewall functions from ACI to a degree, and it reduces the amount of forwarding decision-making in ACI. ACI becomes the server farm and appliance-attachment fabric, a somewhat more limited role than the “humongous virtual switch/router that everything connects to.” Another way to put it: ACI’s role is that of a switch-based spine and leaf fabric, with no further ambitions.

Yes, doing this means buying and deploying at least a couple more high-speed switches (inside and outside, in effect, although the two roles can be combined into a single pair of physical switches by using inside/outside VRFs or just routing). But it also limits ACI’s role and blast radius.

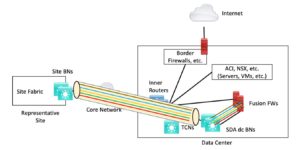

Here’s a diagram from a prior SD-Access blog showing this. The colorful part is SD-Access Transit tunnels carrying traffic to data center border nodes and fusion firewalls. Note the Inner Routers serve as data center hubs, interconnecting the rest of the network (DWDM core), SDA headend, ACI and NSX server world, and the edge complex.

The “hard-core ACI” or “tightly coupled” approach replaces the inner routers by connecting everything through ACI instead.

With the arm’s length approach, user-to-Internet traffic might keep working even if the ACI fabric is rather messed up. Or not, depending on where you connected any necessary services. With the tightly coupled approach, if ACI is having a bad day, everybody is having a bad day, even more so than with the arm’s length approach. Words like “failure domain” and “blast radius” apply.

ACI and VMware

For what it is worth, ACI has historically played fairly well with VMware ESX/ESXi. A modest amount of integration lets you set up policy for VLANs and IP ranges in ACI and have the corresponding configuration applied automatically in ESXi.

https://www.ciscopress.com/articles/article.asp?p=3100058&seqNum=2

Why Use NSX?

There has been some marketplace motion from VMware ESXi to NSX. Since doing so involves handing fairly large amounts of money to VMware for licensing (or did, the last time I checked), there must be a compelling reason!

ESXi lets you virtualize your servers while connecting them at Layer 2 to, e.g., an ACI fabric. It is (was?) the most common way to deploy VMware. A rather simplistic description is that ESX/ESXi lets you run virtual VLANs in VMware supporting your virtual machines (VMs) and extend that virtual networking into the physical world. The routing typically all lived in the physical world. This approach basically shifted some physical servers into VMs on VMware ESXi hosts.

NSX adds more virtualization. With NSX, the VMware admin can stand up their own VXLAN virtual LANs that tunnel independently across the physical switching infrastructure to carry traffic between physical NSX hosts. NSX can provide ACL enforcement as well as integrated third-party virtual firewalling. It can also provide virtual routers, edge firewalls, load balancing, and other services “at the logical edge” to connect the whole NSX virtual world to the physical world.
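
For readers who haven’t looked inside the tunnels, here is a minimal Python sketch of the 8-byte VXLAN header defined in RFC 7348: a flags byte with the “I” bit set, 24 reserved bits, a 24-bit VNI identifying the virtual LAN, and 8 more reserved bits, carried in UDP to port 4789. The helper names are hypothetical, and the outer Ethernet/IP/UDP headers are omitted. (Newer NSX releases use Geneve, a similar UDP encapsulation, rather than VXLAN; the principle is the same.)

```python
import struct

VXLAN_UDP_PORT = 4789         # IANA-assigned VXLAN destination port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08   # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI (RFC 7348 layout)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header.

    The outer Ethernet/IP/UDP headers added by the host's tunnel endpoint
    are left out; the physical fabric forwards only on those outer headers.
    """
    return vxlan_header(vni) + inner_frame


def parse_vni(packet: bytes) -> int:
    """Extract the VNI from a VXLAN-encapsulated payload."""
    flags_word, vni_word = struct.unpack("!II", packet[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set")
    return vni_word >> 8
```

Because the physical switches only see outer IP/UDP headers between NSX hosts, the VMware admin can add new VNIs without touching physical VLANs.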

Put differently, NSX allows you to build an “in-house cloud” that securely carries its own traffic over tunnels on top of any physical infrastructure, with discrete edge hand-off to the physical side of things. (Or not, e.g., tunneling between sites.)

NSX does allow you to operate more like ESXi, with physical side VLANs and VXLAN connecting the various NSX hosts. But this means both teams have to be involved in troubleshooting when problems arise. I see this as an evolutionary step between ESXi and “full” NSX. It does allow NSX’s distributed firewalling to be leveraged. And squeezing as much L2 VLAN technology out as possible likely increases robustness. It also puts more of the functionality in the hands of the VMware admin – no need to coordinate on physical VLANs once the initial setup is done.

Distributed firewalling seems to scale fairly well. In the cases I’ve seen, there is a firewall instance on each VMware host. Don’t expect to save money by doing that (based on the last pricing drill I did).

In contrast, I personally consider the whole edge or border function in NSX to be the weakest part of the product. If the entire VM world is supported by tunnels over the physical underlay, there needs to be some way to escape the overlay world and get to the physical devices and the rest of the network.

NSX edge entails basic software-based routers, firewalls, etc., typically running on dedicated server hardware. If you have multiple VRFs / tenants, more capacity may be advisable for good scaling. It may take a lot of server hardware to get the capacity a fairly inexpensive Layer 3 switch could have provided. Is that a good trade-off?

As soon as you use a stateful feature (stateful edge firewall, NAT, or load balancing), you cannot do ECMP, and your edge capacity becomes more limited. All this may have improved somewhat since I last worked through it with a customer and a VMware design team, about two years ago. For small to medium-size data centers, it may not be much of an issue. For bigger ones, with many 10 Gbps links, let alone 40 or 100 Gbps links, it might be a serious issue. 400 Gbps???
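
Why stateful services and ECMP don’t mix can be sketched in a few lines of Python. In this toy model, each direction of a flow is hashed independently across the edge nodes, as can happen when routers on either side make their own ECMP choices, so a stateful firewall or NAT on one node may never see replies that land on another. The SHA-256-based hash, the addresses, and the function names are illustrative assumptions, not how NSX or any particular router hashes.

```python
import hashlib


def ecmp_pick(src_ip: str, dst_ip: str, proto: int,
              sport: int, dport: int, n_paths: int) -> int:
    """Pick one of n_paths edge nodes by hashing the 5-tuple (toy hash)."""
    key = f"{src_ip}|{dst_ip}|{proto}|{sport}|{dport}".encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % n_paths


def is_asymmetric(src_ip: str, dst_ip: str, proto: int,
                  sport: int, dport: int, n_paths: int) -> bool:
    """True if the reply direction hashes to a different edge node."""
    fwd = ecmp_pick(src_ip, dst_ip, proto, sport, dport, n_paths)
    rev = ecmp_pick(dst_ip, src_ip, proto, dport, sport, n_paths)
    return fwd != rev


def asymmetric_fraction(n_paths: int, flows: int = 1000) -> float:
    """Share of sample TCP flows whose two directions use different nodes."""
    hits = sum(
        is_asymmetric("10.0.0.10", "192.0.2.1", 6, 1024 + i, 443, n_paths)
        for i in range(flows)
    )
    return hits / flows


if __name__ == "__main__":
    for n in (1, 2, 4):
        print(n, "edge node(s):", asymmetric_fraction(n))
```

With four edge nodes, roughly three quarters of the sample flows come back through a different node than they left by; with a single active node, none do. That is essentially why stateful edge services end up on one active node, which caps edge capacity.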

Viewed differently, for companies where the aggregate server to rest of network traffic is under 10 Gbps or a small multiple thereof, NSX edge is likely not a problem, although you still need to pay attention to the details. For bigger firms, you’d best do your homework to avoid bottlenecking. (The split at 10 Gbps may have increased some as servers have improved, but you get the idea.)
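
A back-of-the-envelope sizing calculation shows the difference. The sketch below assumes a hypothetical per-edge-node throughput, one spare node for ECMP (N+1), and, for stateful services, one active/standby pair per tenant whose entire load must fit on the active node. Real per-node numbers depend on hardware, packet sizes, and enabled features, and one pair can host several tenants, so treat the function names and inputs as placeholders for your own testing.

```python
import math


def ecmp_edge_nodes(aggregate_gbps: float, per_node_gbps: float) -> int:
    """Active/active edge nodes for stateless ECMP, plus one spare (N+1)."""
    return math.ceil(aggregate_gbps / per_node_gbps) + 1


def stateful_edge_nodes(tenant_gbps: list[float], per_node_gbps: float) -> int:
    """Active/standby pairs, one per tenant; each tenant must fit one node."""
    for load in tenant_gbps:
        if load > per_node_gbps:
            # Stateful traffic cannot be spread across nodes via ECMP.
            raise ValueError(f"{load} Gbps exceeds one node's {per_node_gbps} Gbps")
    return 2 * len(tenant_gbps)


if __name__ == "__main__":
    print("ECMP, 40 Gbps:", ecmp_edge_nodes(40, 10))
    print("Stateful, 8/6/4 Gbps tenants:", stateful_edge_nodes([8, 6, 4], 10))
```

For example, 40 Gbps aggregate at an assumed 10 Gbps per node gives five ECMP edge nodes, three stateful tenants at 8, 6, and 4 Gbps need six nodes, and a single 15 Gbps stateful tenant does not fit at all.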

Which, or Both Together?

When both products (ACI and NSX) are under consideration, network versus server team politics often comes into play. And budgets.

Most sites now have 70 to 90% or more of their server platforms virtualized. NSX has a lot of positives from the VMware admin point of view. From the networking point of view, the one scary thing about NSX is that edge connection running BGP and doing firewalling and load balancing – skills and ability to troubleshoot being the primary concerns.

Also, as previously noted, to get high capacity, that edge complex eats servers. Cheaper than a self-contained router or L3 switch? Including maintenance? If one fails, the VMware admin has some work to do, building a new one. Compared to grabbing a spare switch etc.?

The biggest issue with using the two products together is that both offer policy enforcement (ACLs, if you wish).

But with most VMs in VMware, it generally makes sense for policy enforcement to be done there. Indeed, VM-to-VM traffic may never exit NSX, and because it is tunneled over the physical / ACI fabric, ACI and physical firewalls cannot enforce policy on it.

ACI can only enforce policy on traffic where one or both endpoints are physical devices connected outside NSX.
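
The enforcement split can be expressed as a small Python function. The `Endpoint` type, the endpoint names, and the labels are hypothetical; the logic simply restates the rule above: traffic with an NSX overlay endpoint can be policed by NSX, and only traffic with a physical endpoint is visible to ACI or physical firewalls as anything other than tunnels between NSX hosts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    on_nsx_overlay: bool  # True for a VM on an NSX overlay segment


def enforcement_points(src: Endpoint, dst: Endpoint) -> list[str]:
    """Where policy could be applied to traffic between src and dst."""
    points = []
    if src.on_nsx_overlay or dst.on_nsx_overlay:
        points.append("NSX distributed firewall")
    # VM-to-VM traffic crosses the fabric only inside tunnels, so ACI
    # can act only when at least one endpoint is physical.
    if not (src.on_nsx_overlay and dst.on_nsx_overlay):
        points.append("ACI / physical firewall")
    return points


if __name__ == "__main__":
    vm_a, vm_b = Endpoint("vm-a", True), Endpoint("vm-b", True)
    db = Endpoint("db-physical", False)
    print(enforcement_points(vm_a, vm_b))
    print(enforcement_points(vm_a, db))
```

With 70 to 90% of servers virtualized, most flows fall into the first case, which is the heart of the argument for doing enforcement in NSX.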

In short, the argument for “micro-segmentation” security via NSX is generally more compelling, assuming the organization feels NSX’s functionality provides enough efficiency and other value to offset the licensing cost.

The challenge is that purchasing and licensing both products is costly, as is the learning curve for each. Is there sufficient justification for ACI? The marginal controller costs are fairly low. That leaves licensing costs.

Generally, when both products are in use, ACI is used primarily to automate the fabric and possibly to provide security policy enforcement for traffic to or from the physical servers and other devices attached to the fabric.

As the scale of the data center fabric increases, ACI or another fabric automation tool becomes increasingly useful. How useful and costly, compared to other data center fabric automation and management tools, is what you’ll have to decide. Also, learning curve: how much time will it take to master ACI? How does that affect finding staff?

And The Cloud?

Both ACI and NSX let you use the same (or similar) tools to manage your cloud presence and networking.

ACI says pretty clearly that it only does Layer 3 to AWS or Azure. While you can have an EPG that is “stretched,” the two ends need to use different IP subnets.
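
Since the two ends of a stretched EPG must use different subnets, a quick pre-check is to confirm that the on-premises and cloud prefixes don’t overlap. Here is a minimal sketch using Python’s standard `ipaddress` module; the subnets and function names are hypothetical, and this is not an ACI tool.

```python
import ipaddress


def overlapping_pairs(on_prem: list[str], cloud: list[str]) -> list[tuple[str, str]]:
    """List (on-prem, cloud) subnet pairs that overlap."""
    pairs = []
    for a in on_prem:
        for b in cloud:
            if ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b)):
                pairs.append((a, b))
    return pairs


def stretched_epg_addressing_ok(on_prem: list[str], cloud: list[str]) -> bool:
    """True if the two ends of a stretched EPG use disjoint subnets."""
    return not overlapping_pairs(on_prem, cloud)


if __name__ == "__main__":
    print(stretched_epg_addressing_ok(["10.1.1.0/24"], ["10.2.1.0/24"]))
    print(overlapping_pairs(["10.1.0.0/16"], ["10.1.5.0/24"]))
```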

Some light research indicates NSX can do L2 to the AWS VMware cloud or to Azure, although that is a fairly unnatural act. Cisco’s documentation makes it clear the VPCs and VNets are not normally extended at Layer 2.

https://docs.microsoft.com/en-us/azure/vmware-cloudsimple/migration-layer-2-vpn

I’ll note that the Azure document says “Migration,” perhaps a weak hint that L2 extension is not considered a production solution. So yes, in principle, you can apparently use VMware L2 VPN tunneling to extend L2 between data centers, into the cloud, or between cloud instances.

I, along with several people I consider experts, regard extending L2 between data centers as a terrible idea, as it greatly diminishes the robustness of the application and related network components. Two nearby data centers: not great. Data center to the cloud: quite possibly more risk, more ways to fail. More than two sites connected at L2: a really bad idea. Yet three sites on extended L2 happens, for example, when “migration” stalls. Nobody ever prioritizes getting rid of the last “legacy hosts” in “the old site.” So it sits there for years, putting the main production sites at risk.
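
The “more sites, more ways to fail” point can be put in rough numbers. Assume, hypothetically, that each site independently has a 2% chance per year of an L2 event (a loop or broadcast storm, say), and that on a stretched L2 domain such an event reaches every site. The chance that the shared domain is hit is then 1 - (1 - p)^n for n sites. The sketch ignores the failure modes of the extension links themselves, which only make matters worse.

```python
def shared_l2_event_probability(p_per_site: float, sites: int) -> float:
    """Chance that at least one site triggers an L2 event that, on a
    stretched L2 domain, reaches every site (independence assumed)."""
    return 1 - (1 - p_per_site) ** sites


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, "site(s):", round(shared_l2_event_probability(0.02, n), 4))
```

Under those assumptions, the probability rises from 2% for one site to about 4% for two and about 5.9% for three, and every site shares it.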

The alternative perspective is that organizations may well have poorly designed applications or HA/DR strategies that require such L2 extension. In which case, NSX provides one way to get there. (Also known as “more rope to hang yourself with.”)

Conclusions

There isn’t an easy answer here.

Discussions I’ve seen have (to me) had the feel of each team (network and server/app) wanting to upgrade to “the latest and greatest.” At some point, you have to do so or get left behind. The focus should be more on “what value is this providing to the business and to my team’s management capabilities?”

With that reality check in mind, I’d encourage considering what you need compared to what each product provides, and what your priorities are. It is very seductive to spin up multiple firewalls in NSX to distribute the processing. It may not save money. But it does put the distributed firewalling in close proximity to the VMs, which is attractive.