You have a disaster recovery plan and you’re ready for that unexpected event that takes out your primary data center and your ability to conduct business. But are you really ready? Let’s learn from some examples of less-than-stellar disaster response incidents.

Business continuity, disaster planning [1], and IT security response planning are inter-related aspects of keeping business systems functioning in the face of major disruptions. Disasters tend to be local or regional and the pandemic has taught us that they can be global. We’ve included IT security in the mix because the response planning [2] is very similar to other forms of disaster planning. Both business continuity and disaster recovery planning are well covered in other articles, therefore we’re going to focus on tips to include in your planning process.

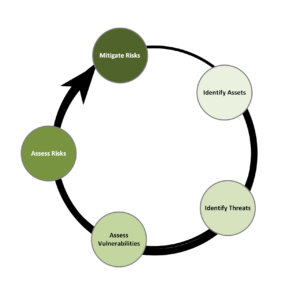

Threat Analysis

Disaster recovery planning is really about analyzing the potential threats and making sure that your plan accounts for those threats. Disasters can range from man-made disasters to natural disasters. By analyzing threats and their impact on the business, you can be prepared to satisfactorily handle them.

Threat Analysis and Mitigation Cycle

Threats can be short duration or long duration, depending on the extent of damage to the infrastructure. The extent of damage is driven somewhat by the size of the disaster. Is it local, regional, national, or global?

You should already have a good plan for handling common failures:

- ISP failure – rely on redundant service provider connections with good monitoring so you know when one has failed.

- Cloud provider outage – replicate your cloud services deployments to multiple availability zones in each cloud provider.

- Power feed failure – the most common, highly-impacting failure is the feed from the power grid. A UPS and backup generator are the typical solution for many data centers.

- Air conditioning – divide the load into segments and avoid single points of failure like shared chillers and pumps.

Threat Analysis Failures – Learning from Others’ Mistakes

Even good planning can miss fundamental changes in the business and non-obvious failure modes. It is instructive to examine some examples of poor threat analysis and testing in order to learn from the mistakes of others. We’ve seen many of these examples directly in our network assessments. Business leaders may need to ask detailed questions to determine if the level of testing is adequate for your business.

Ensure adequate Contingency Planning and Implementation

Poor planning or a poor implementation can invalidate an otherwise acceptable contingency approach. Sometimes it is the small things that shutdown the business. Other times it is an inadequate or inappropriate implementation.

- Downstream effects can ripple upstream – A manufacturing company needed to upgrade the network device that provided connectivity for the shipping dock printer. Instead of purchasing a new network device, the decision was made to upgrade the existing device. The upgrade took about 15 minutes, during which the loading dock filled with products that couldn’t be shipped until the printer could print the bills of lading. If the upgrade had failed or taken longer, the production line may have had to be shut down at great cost to the business. Instead, the IT team could have purchased a replacement device, properly configured for the job. The change would have then taken a few seconds, followed by a few short commands to verify functionality.

- High-availability application design isn’t high availability – During a network assessment we found that a highly available application was designed with two data centers, separated geographically. Unfortunately, a detailed examination determined that the second data center simply forwarded data to the application servers at the first data center which used a single database. Upon investigating further, we found that the application had never successfully been migrated to the backup data center. The business should have taken one of two approaches. First, re-architect the application to allow either data center to receive transactions, known as active-active mode. (See Active-active data centers key to high-availability application resilience [3].) Second, they could have worked to make sure that the main application could fail over to the backup data center within a short time.

- Secure your backups – Would your business survive a determined ransomware attacker? One organization thought they were safe because they performed regular backups and had verified their validity. An attacker obtained access for several months and discovered how and where the backups were done. The attacker encrypted both the online data and the backup files, effectively making the ransom payment the only way to quickly recover. Follow our Seven Tips for an IT Security Foundation [4] to help alleviate cybersecurity risk.

Verify Complete Test Coverage

You have to test everything. This takes thought and many IT teams work in fire-fighting mode that detracts from the thoughtful process that thorough testing requires.

- Test everything – One organization’s technical team was afraid that the migration of the key business application would fail, so they tested everything but that application. The primary data center remained active and only secondary functions were migrated to the alternate data center. The technical team operated primarily in fire-fight mode and didn’t feel like they had the time to adequately prepare for the test while trying to keep up with daily operations. They should have taken the time to determine a safe test plan that would demonstrate total fail-over capability, perhaps by hiring consultants to handle the additional work.

- Mimic the recovery when testing – In another example, an organization prepared for the test by copying data to the alternate data center because it was easier than working from backups. Unfortunately copying data won’t be an option if the primary data center is destroyed or unreachable (think fire, flood, bomb, etc.). Don’t rely on anything in the ‘simulated-failed’ data center. Use only backup copies of applications and data to bring up a data center from lights-out to fully functioning. You’ll quickly discover complex interdependencies that hinder recovery. Example: a network storage system can’t start without DNS and DHCP, but the IP address management system’s files are in the network storage system, creating a circular dependency.

- Ensure network resilience – Consider conducting network failure testing where a device or link is shut down and the network’s response is evaluated. We’ve seen networks that have expanded over time to the point that when a small failure happened, the entire network wouldn’t recover. It required shutting down all the network equipment and bringing them back up a little at a time. The solution was to redesign the network to break it into zones.

High availability networks [5] and reliable network services [6] rely on a dual-core design to achieve reliability and resilience. These designs protect against single points of failure as well as facilitating maintenance (hardware, software, and configuration upgrades). Network routing controls automatically steer application traffic to either of two geographically separate data centers. For example, the big credit card processing companies can switch between data centers within seconds under automatic or manual control.

It also makes sense to use automated network testing tools [7] to actively identify network problems multiple times a day, even when the key applications are not in use.

Include Supporting Infrastructure and Facilities

Don’t overlook the supporting infrastructure and facilities, such as the ones in our next examples. Cooling systems, power systems, and the location of facilities can have serious repercussions for ongoing operations in an emergency.

- Perform complete tests of the backup power system – There are many, many stories of backup power failures, even in facilities that are designed to prevent them. (Check out an analysis in At scale rare events aren’t rare [8].)In one example, a backup generator successfully started subsequent to a power failure. However, an auxiliary generator that provided power to the main generator’s cooling system didn’t automatically start and hadn’t been included in the manually initiated tests. The main generator overheated and shut down. There are plenty of other stories about backup generators that don’t automatically start because the testing process had always been manually initiated, or generators where the fuel delivery service contract had not been renewed.

- Interrupted uninterruptible power supplies – Overcoming the Causes of Data Center Outages [9] states that UPS system failures cause most data center outages and that battery failure is a leading factor. We know of one instance in which a battery failure propagated to adjacent batteries and created a sulphur cloud that turned it into a hazmat emergency. To ensure that doesn’t happen, you should incorporate regular battery maintenance and make sure that all batteries are monitored for current, voltage, and temperature.

- Location, location, location – Examine threats to the physical facility. Hurricane Sandy nearly put one organization in the dark when flood waters reached within a few inches of cresting the entrance to the building power distribution facility, including backup generator, which was located in the basement.Is a facility in or near a flood zone? One-hundred-year storms are become more common, so be prepared for flooding in areas that have historically been safe.

Summary

Creating an IT infrastructure that is resilient to disasters is a detailed exercise. It requires the best people you can find – people who have seen common failures. Do a comprehensive system threat analysis and perform testing at least annually and be sure to test every possible point of failure.

References

[1] Business continuity vs disaster recovery: What’s the difference?

[2] 5 Ransomware Predictions to Ring In 2021

[3] Active-active data centers key to high-availability application resilience

[4] 7 steps for a network and IT security foundation

[5] High Availability Design Guide

[6] On Designing and Deploying Internet-Scale Services

[7] Automated network testing tools actively identify network problems

[8] At Scale, Rare Events aren’t Rare