This is the seventh blog in an Internet Edge series.

Links to prior blogs in the series:

- Internet Edge: Simple Sites

- Internet Edge: Fitting in SD-WAN

- Internet Edge: Things to Not Do (Part 1)

- Internet Edge: Things to Not Do (Part 2)

- Internet Edge: Two Data Centers

- Internet Edge: Double Don’t Do This

We will now turn our attention to Cloud and Internet Edge, which comes in a couple of forms.

The first form is what you do to secure your cloud presence: what does a cloud “data center” look like?

The second form is the local data center’s connectivity to the cloud.

- Cloud connections via VPN might terminate on an internal site-to-site VPN device (router or firewall), on the Internet border router, or, preferably, on a dedicated VPN router in parallel with the border router, sharing the Internet connection.

- Dedicated links to the cloud provider(s) could likewise terminate on the Internet border router or on routers in parallel with it.

- Either way, what I see is that sites are mostly using separate routers for cloud connections. One advantage of doing so is you can fairly cleanly insert a firewall or other security monitoring device on the inside of such a router if you feel the need.

In general, for site-to-site VPN, I would tend to connect the inside interface to an inside/core switch, not to anything in the DMZ. The outside interface connects to the Internet via a dedicated connection, a shared connection, or the Internet router. If you’re just using the Internet router, Cisco FVRF (front door VRF) on the outside interface, tied to a trusted VRF for the tunnel and inside interfaces, works out nicely.

If you trust your cloud VPC instances, then that’s how to do things. If you don’t consider them “internal, trusted,” then you need to run the connection through a firewall somewhere.

- Concerning “internal, trusted”: You can view links to the cloud as part of a (mostly?) trusted WAN between data centers, sites, etc. Or you can firewall each end of such links – which gets costly and complicated. Local security policies will determine what you do for this.

- Treating each cloud instance as a remote data center means you firewall and otherwise secure Internet to cloud instance traffic at the cloud side of things.

Cloud Instance Firewalling

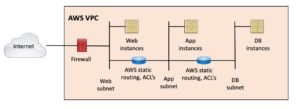

Everything I’ve seen on this topic treats a cloud VPC / VNET pretty much the way a physical data center is treated. You arrange the routing so that the Internet connection goes through a firewall or security ruleset and then gets routed out another interface to one or more private subnets that cannot be directly reached from the Internet. In other words, Internet to firewall outside interface, through the firewall, out the firewall inside / DMZ interface, and to the server workloads in the DMZ. The cloud providers have good documentation on how to expose your front-end web servers (public IPs, NAT, etc.).

If you like, you can put several cloud VPC instances on the “inside” of the firewall and use it to limit traffic between them (if any) – just like segmenting your data center with ACI or ACLs or firewalls. This is arguably a very good idea – while you’re restructuring, provide as much per-app (or small group of apps) isolation (segmentation!) as you can.

At some point, if you’re trying to scale that, you deploy an AWS Transit Gateway (or another vendor’s counterpart): one Internet front end, with separate application segments (VPCs) on the back side. (See also the Cisco link later in this blog.)

Rather than re-invent words and diagrams, I’ll refer you to the CSP’s documentation:

- https://aws.amazon.com/blogs/networking-and-content-delivery/deployment-models-for-aws-network-firewall/

- https://docs.microsoft.com/en-us/azure/cloud-adoption-framework/migrate/azure-best-practices/migrate-best-practices-networking#best-practice-deploy-azure-firewall

The basic diagram then is something like the following:

For High Availability, you do that in two Availability Zones. Or, depending on your application’s requirements, perhaps you do some form of global / DNS-based load balancing across a couple of regions.

Another decision point is using the CSP’s firewall versus using a virtual firewall from your favorite vendor. I have no strong opinion on that. Your security team probably does.

Taking That Further

Virtual CiscoLive 2021 presentation BRKSEC-1041 has some interesting slides on this topic. Its focus was the insertion of a virtual FTD firewall, but the sequence for AWS deployment had some other good ideas. The basic concept was how to share a vFTD deployment across multiple VPCs for different apps, versus buying/deploying a separate HA vFTD pair for each VPC. The answer, put simply, was to front-end the app VPCs with the vFTD VPC, with an AWS Transit Gateway in between.

The virtual CL2021 BRKSEC-1041 presentation can be found at https://www.ciscolive.com/c/dam/r/ciscolive/us/docs/2021/pdf/BRKSEC-1041.pdf

Reaching the Cloud

The other topic here, which perhaps applies to the cloud side of things as well, is how to integrate the Internet connectivity with the “cloud WAN” (multi-cloud) connectivity.

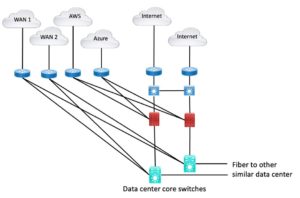

I see few surprises here. Here is a diagram showing one reasonably clean way to set up a physical data center for Internet Edge + cloud and WAN. (Yes, modified from a prior blog.)

Figure 2: Star Connect to Cloud, WAN, and Internet Routers

And if your security team insists, that might instead look like the following diagram:

Figure 3: Star Connect + Firewalls

In the extreme case, maybe you even firewall the WAN too.

That last diagram probably looks a bit better if we consolidate firewalls, connecting either directly to interfaces on them or via VLANs on switches, with fewer trunk interfaces.

That looks like the following:

Figure 4: Shared Firewall Pair

A variant of this might be to substitute traffic monitoring or other security devices for the firewalls on the cloud side of that diagram (e.g., reporting traffic flows back to Stealthwatch for the cloud and WAN links/devices).

Leveraging A CoLo

More and more sites are using a well-connected CoLo site as a way to take advantage of the CoLo’s connectivity, especially Equinix sites or sites offering Megaport services (which might loosely be described as a portal-based “virtual WAN / Internet patch panel” for rapid service provisioning).

More specifically, putting a pair of core switches and border routers, as shown above, into a well-connected CoLo facility makes it easier / faster to add circuits or bandwidth.

This might be part of lift and shift or another cloud strategy. Put the above connections and devices in the CoLo, and then tie the data center core switches to the CoLo core. Done!

Some CoLo sites, notably some Equinix sites and those offering Megaport services, have a web-portal-based, fast-provisioning self-service system.

You get connected to their device, probably via a high-capacity L3 switch somewhere. That may take a bit of time to set up, like 48 hours, because it requires physical cabling to patch you into their “big L3 switch” or whatever implements the portal-driven connections.

You can then use the web portal to order up and pay for connections to CSPs, to the CoLo provider’s other sites, etc. The system automates setting up a virtual connection. You also have to get the other end of the connection set up, via the CSP’s portal or by a partner. Then you have a virtual connection in an hour or less, rather than days/weeks/months. The slow part here is you, not so much the automation. (Well, yes, Azure is slow getting stuff provisioned.)

Equinix has extended that functionality, so you can order up a virtual router or firewall connected to ECX, and VPN or SD-WAN to it, to get a quick connection to the CoLo. I’m not tracking Megaport, but they may have something similar. Provisioning time: minutes. Have your credit card handy!

In the case of Equinix, their “virtual patching” system can then connect you to any other Equinix site as well, including business partners with an Equinix presence (and suitable Letters of Authorization). If you like this, your main WAN becomes connections to Equinix sites for major sites and SD-WAN / VPN for other sites (still into Equinix, perhaps), with Equinix providing the backbone transport and Internet connectivity.

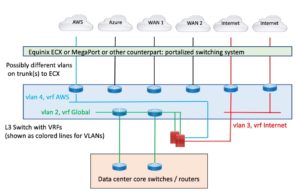

Something I noticed a couple of years ago: if you want to, an L3 switch that supports VRFs might meet your needs. That does assume you don’t require “fancy QoS” or crypto / VPNs, features the L3 switch likely does not support.

In that case, the border routers shown above might actually be VRFs in a single L3 switch or a pair of them. The outside circuits might be VLANs on one or two links to, e.g., the Equinix Cloud Exchange (ECX) device, where the portal patches them through to the CSPs, the Internet, and other CoLo sites where other WAN hubs are located. You can even insert firewalls between the data center-facing side and the various remote VRFs.

Here’s an attempt at diagramming that:

Figure 5: Leveraging CoLo Portal for Connectivity

Note that the cabling from the blue to green rectangle is logical: VLANs on one or two physical links. The point is that getting the physical links in is slow, but setting up dot1q-based virtual connections via the portal afterwards is fast.

The same approach should work with Megaport or others as well. (“Your mileage may vary.”)

Some explanation: the data center core connects to the L3 switch via routed links into a global VRF, which extends via VLAN to the firewalls. Each of the other VRFs / VLANs is also connected to the firewalls, which act as the fusion router (and firewall) between them. Thus, the firewalls control both data center to <whatever> traffic and inter-VRF traffic, e.g., between WAN and AWS or WAN and Internet.
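As a rough illustration of the fusion firewall idea, here is a minimal Python sketch of a default-deny policy between VRFs. The VRF names and the allowed pairs are hypothetical, chosen only to mirror the diagram; a real firewall policy would of course match on far more than source and destination zone.

```python
# Hypothetical VRF / zone names and permitted flows, for illustration only.
# Each tuple is (source VRF, destination VRF). Anything not listed is denied.
ALLOWED_FLOWS = {
    ("datacenter", "aws"),
    ("aws", "datacenter"),
    ("datacenter", "internet"),
    ("wan", "datacenter"),
    ("wan", "aws"),
    ("wan", "internet"),
}


def firewall_permits(src_vrf, dst_vrf):
    """Return True if traffic from src_vrf to dst_vrf is allowed.

    Traffic that stays inside one VRF is routed by the L3 switch and never
    crosses the fusion firewall, so it is treated as permitted here.
    """
    if src_vrf == dst_vrf:
        return True
    return (src_vrf, dst_vrf) in ALLOWED_FLOWS


for src, dst in [("wan", "aws"), ("internet", "datacenter")]:
    verdict = "permit" if firewall_permits(src, dst) else "deny"
    print(f"{src} -> {dst}: {verdict}")
```

The point of the model is that every inter-VRF path has exactly one decision point, which is what makes the firewall pair a natural place for both enforcement and flow monitoring.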

(The slightly sketchy part there is perhaps the WAN connections. ECX can connect you to anything connected to any Equinix ECX location. You’d still need circuits (or VPNs) from regional sites into Equinix.)

I brought this up because Network As A Service (NaaS) has gotten a good bit of press lately. What’s not clear from skimming the vendors’ materials is how exactly your sites connect to the NaaS (“the sketchy part they don’t talk about”). The NaaS is just something like the above diagram, with static or virtual routers or something automatically provisioned and configured. (Virtual patch panel?)

Alkira, one NaaS vendor, can also provide virtual Palo Alto firewalls and plumb them in for you.

Your site to NaaS connections are then router-based or SD-WAN based VPN.

One key question to ask the NaaS provider is about transport costs: if the NaaS is a VNF in the cloud, how much will traffic into (ingress) or out of (egress) the NaaS cost you?
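To make that question concrete, here is a back-of-the-envelope Python sketch. The traffic volumes and per-GB rates are made-up placeholders, not any provider’s actual pricing; plug in the numbers from your own traffic reports and the provider’s quote.

```python
def monthly_transfer_cost(egress_gb, ingress_gb,
                          egress_rate_per_gb, ingress_rate_per_gb=0.0):
    """Estimate monthly data transfer charges (same currency as the rates)."""
    return egress_gb * egress_rate_per_gb + ingress_gb * ingress_rate_per_gb


# Hypothetical volumes and rates; substitute real figures from the provider.
estimate = monthly_transfer_cost(
    egress_gb=20_000,
    ingress_gb=15_000,
    egress_rate_per_gb=0.05,
    ingress_rate_per_gb=0.0,
)
print(f"Estimated monthly transfer cost: ${estimate:,.2f}")
```

Even a crude estimate like this is useful for comparing a NaaS quote against the cost of dedicated circuits into a CoLo.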

Transit Gateways

My brain is wired to use AWS terms for some reason (first thing learned?).

Wouldn’t it be nice (simpler!) to have the same terms and behaviors across CSPs? I suppose the downside might be subtle differences in behaviors. Anyway, see also:

https://www.linkedin.com/pulse/aws-azure-gcp-virtual-networking-concepts-overview-mate-gulic/

At one point, I started to write a comparison blog covering the differences between the CSPs, and was surprised at how few there were. Then the above item showed up.

Anyway, AWS transit gateways and Azure VPN CloudHub are ways to connect up a bunch of inbound links (the WAN) and VPCs or VNets, without getting into the non-transitive connectivity you run into when doing VPC (VNet) peering.

These gateways scale fairly well but do have scaling limitations.

Basically, they are approximately the cloud counterpart to what I just described, perhaps with some routing limitations.

Here are some links if you want to explore that further…

AWS Transit Gateway:

Azure VPN CloudHub:

These can also be useful just for connecting multiple VPCs (VNets) efficiently.

Please do avoid duplicating internal/private addresses, so you don’t have to redo everything to de-duplicate before you can interconnect VPCs. Convince your app/digital transformation team that they need to work with you on addressing, just like in physical data centers. Your organization may not have originally intended interconnection, but <drift> happens.

Put differently, if not coordinated and managed, the application or server folks may go wild with 10.0.0.0/24 or something in each VPC. And then want you to NAT to fix it, later. Best to get out in front of that!
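One cheap way to get out in front of it is to check planned address blocks for overlap before anything gets built. Here is a minimal sketch using Python’s standard `ipaddress` module; the VPC names and CIDR blocks are hypothetical examples.

```python
import ipaddress
from itertools import combinations


def find_overlaps(vpc_cidrs):
    """Return sorted pairs of VPC names whose CIDR blocks overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in vpc_cidrs.items()}
    return [
        (a, b)
        for (a, net_a), (b, net_b) in combinations(sorted(nets.items()), 2)
        if net_a.overlaps(net_b)
    ]


# Hypothetical VPC names and address blocks, for illustration only.
vpcs = {
    "app-orders": "10.0.0.0/24",
    "app-billing": "10.0.0.0/24",    # exact duplicate of app-orders
    "shared-services": "10.20.0.0/16",
    "app-reports": "10.20.5.0/24",   # falls inside shared-services
}

overlaps = find_overlaps(vpcs)
for a, b in overlaps:
    print(f"Overlap: {a} ({vpcs[a]}) and {b} ({vpcs[b]})")
```

Run against whatever address plan you maintain (spreadsheet export, IPAM dump, etc.), this catches both exact duplicates and blocks nested inside larger ones, either of which will get in the way of peering or attaching VPCs to a transit gateway later.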

What’s Different with Cloud

Short answer: not much.

There are likely no users in the cloud unless your users are doing remote desktop VDI in the cloud. I’ve already run into that, and it does interesting things to your onsite firewall rules.

I’m going to ignore Global Load Balancer and that whole topic – this blog is already too long.

AWS presentation on Best Practices:

I’ll note that the firewalls are there to serve at least two purposes:

- Control internal and external access to applications

- Monitor flows between locations

You might also have other firewalls or tools providing enforcement of segmentation and controlling inter-segment flows. (They don’t fit this Internet Edge story.)

For cost and labor reasons, it would be great to design to minimize the number of firewalls or firewall-like devices. Latency is usually why that is a hard problem.

Conclusion

We’ve seen that you can structure the cloud much like an onsite three-tier + firewall design.

When applications are deployed to the cloud in separate VPCs (VNets), gateways can be useful for connecting them, also for front-ending them with a virtual firewall to control inbound flows and likely inter-VPC flows as well.

We also covered CoLo-centric designs and alluded to NaaS designs.

I hope this gave you some interesting food for thought!